bagging predictors. machine learning

Then ensemble methods were born which involve using many. Support Vector Machine.

Ensemble Learning Explained Part 1 By Vignesh Madanan Medium

Machine Learning for.

The k-fold cross-validation procedure is used to estimate the performance of machine learning models when making predictions on data not used during training. Machine Learning is a part of Data Science, an area that deals with statistics, algorithmics, and similar scientific methods used for knowledge extraction. What is Boosting in Machine Learning.
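A minimal sketch of k-fold cross-validation using only the Python standard library. The names `k_fold_indices` and `cross_val_score`, and the `fit` and `score` callables, are hypothetical helpers introduced here for illustration; they do not come from any particular library.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size


def cross_val_score(fit, score, X, y, k=5, seed=0):
    """Average a score over k held-out folds.

    fit(X_train, y_train) returns a model and score(model, X_test, y_test)
    returns a float; both are supplied by the caller (assumed interface).
    """
    scores = []
    for train_idx, test_idx in k_fold_indices(len(X), k, seed):
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        scores.append(score(model, [X[i] for i in test_idx], [y[i] for i in test_idx]))
    return sum(scores) / len(scores)
```

Each sample lands in the held-out fold exactly once, so every score is computed on data the model did not see during fitting.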

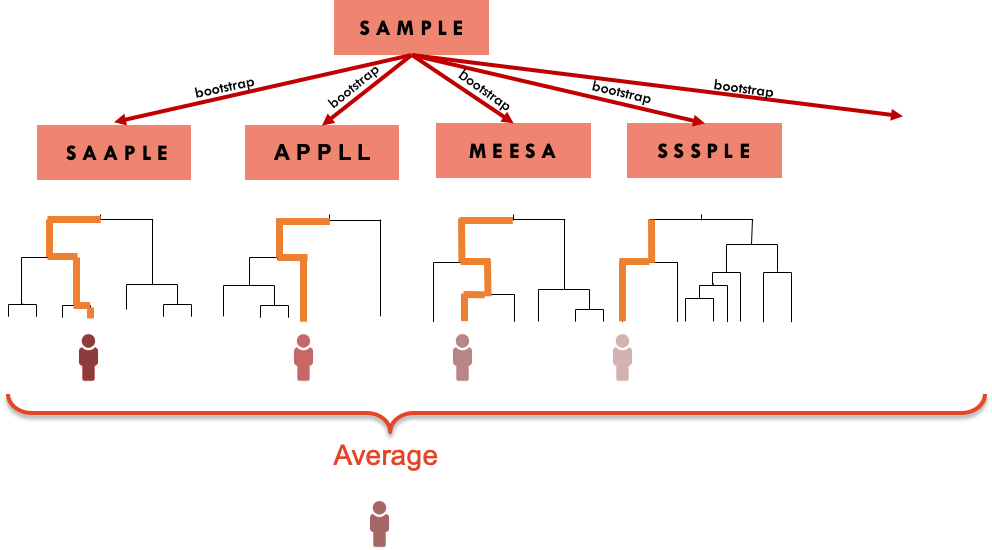

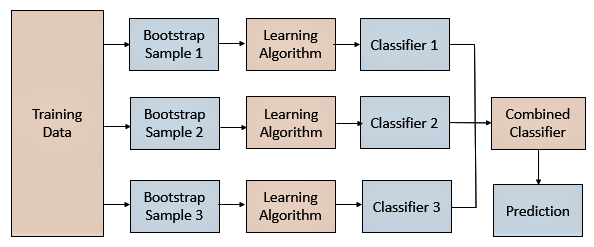

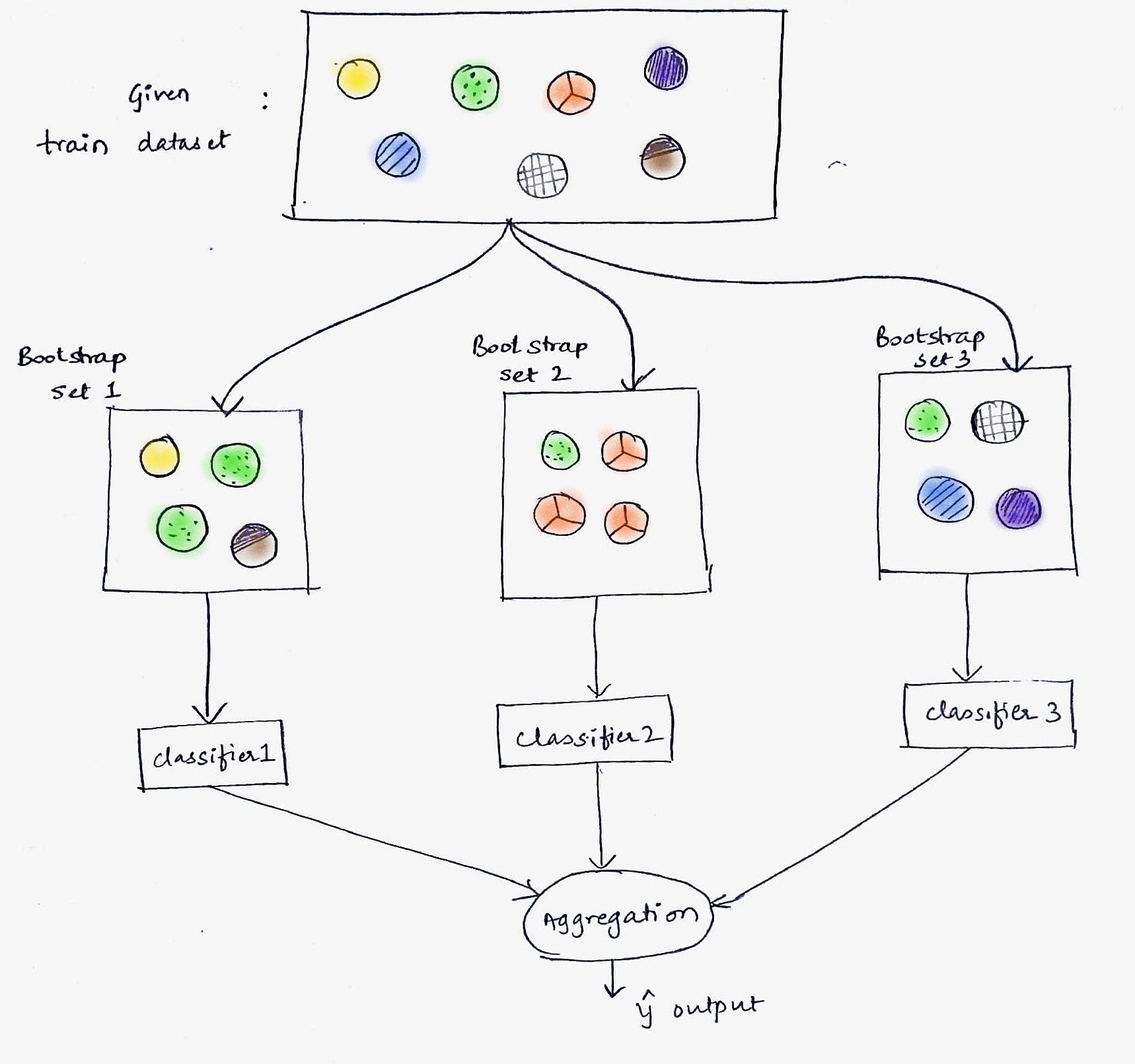

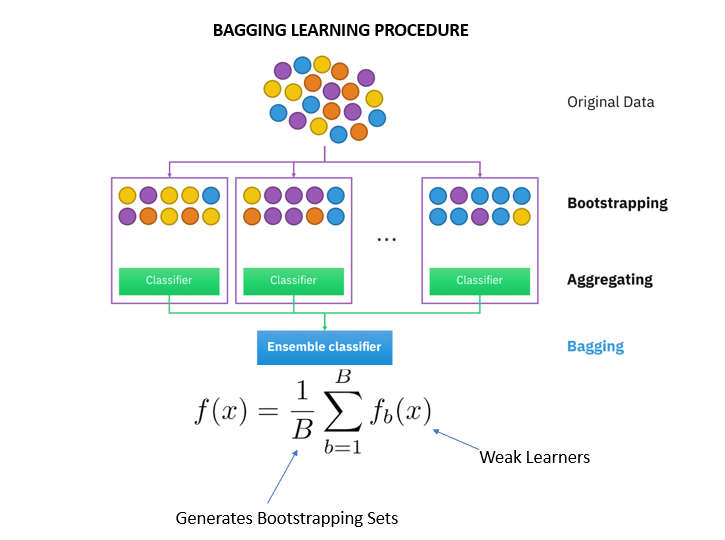

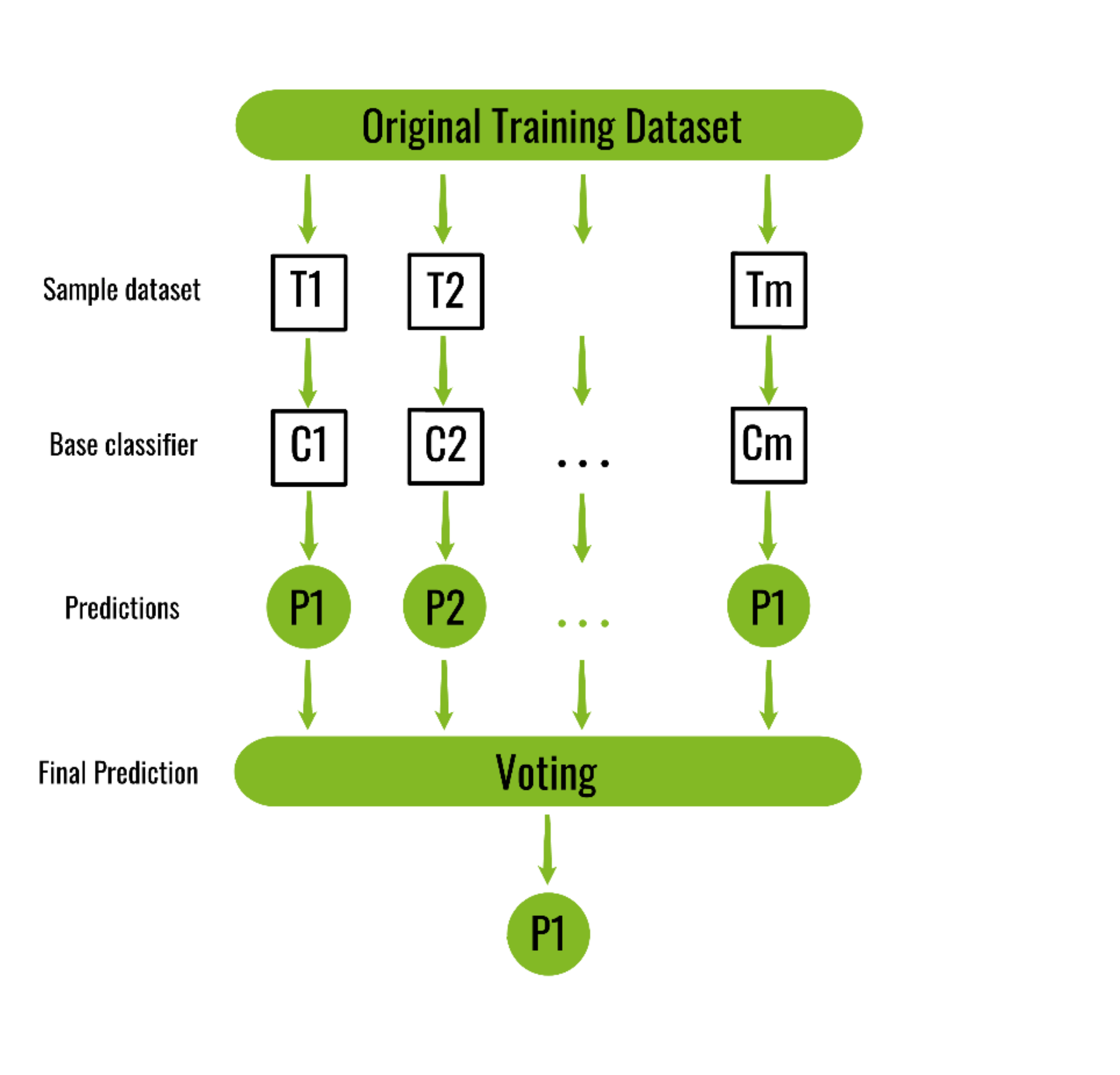

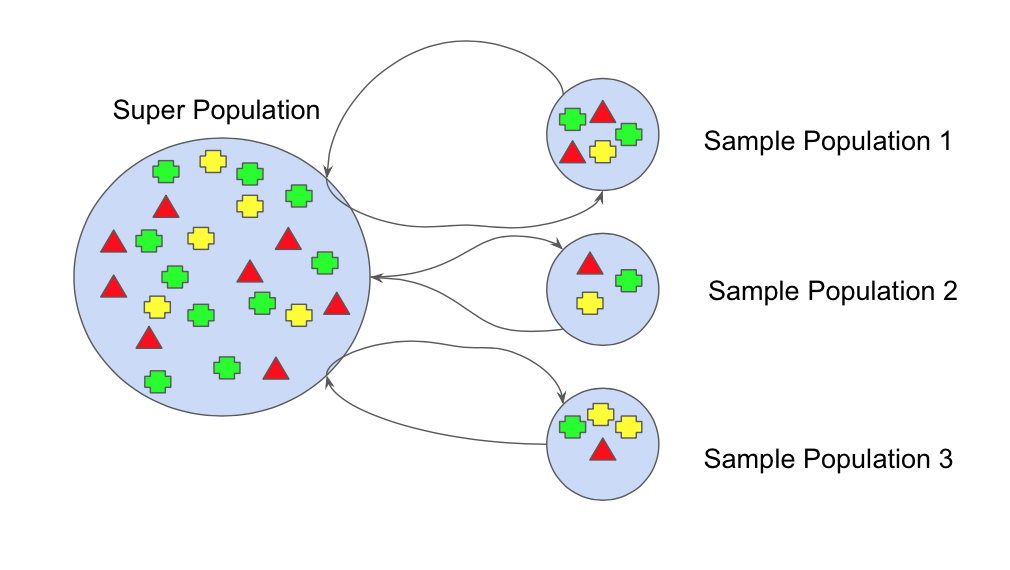

When bootstrap aggregating is performed, two independent sets are created. In statistics and machine learning, ensemble methods use multiple learning algorithms to obtain better predictive performance than could be obtained from any of the constituent learning algorithms alone. Random forests have become a very popular "out-of-the-box" or "off-the-shelf" learning algorithm that enjoys good predictive performance with relatively little hyperparameter tuning.
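To make the idea of combining several learners concrete, here is a small sketch of bagging for classification: each base model is fit on a bootstrap sample and the ensemble predicts by majority vote. The base learner is a one-feature decision stump chosen for brevity, and `fit_stump`, `fit_bagging`, and `predict_bagging` are hypothetical names; a real application would more likely use full decision trees.

```python
import random
from collections import Counter


def fit_stump(X, y):
    """Fit a threshold classifier (decision stump) on 1-D inputs with 0/1 labels."""
    best = None
    for threshold in sorted(set(X)):
        # Try both orientations: (label left of threshold, label right of it).
        for left, right in ((0, 1), (1, 0)):
            errors = sum(
                (left if x <= threshold else right) != label
                for x, label in zip(X, y)
            )
            if best is None or errors < best[0]:
                best = (errors, threshold, left, right)
    _, threshold, left, right = best
    return lambda x: left if x <= threshold else right


def fit_bagging(X, y, n_models=25, seed=0):
    """Fit n_models stumps, each on its own bootstrap sample of (X, y)."""
    rng = random.Random(seed)
    n = len(X)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        models.append(fit_stump([X[i] for i in idx], [y[i] for i in idx]))
    return models


def predict_bagging(models, x):
    """Aggregate the base models' predictions by majority vote."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]


X = list(range(10))
y = [0] * 5 + [1] * 5
ensemble = fit_bagging(X, y)
```

For regression the vote would be replaced by an average of the base models' outputs.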

Chapter 11 Random Forests. Here we discuss what feature selection is in machine learning and the steps to select data points in feature selection. In fact, the easiest part of machine learning is coding.

If you are new to machine learning, the random forest algorithm should be at your fingertips. Random forests are a modification of bagged decision trees that build a large collection of de-correlated trees to further improve predictive performance. Traditionally, building a Machine Learning application consisted of taking a single learner, like a Logistic Regressor, a Decision Tree, a Support Vector Machine, or an Artificial Neural Network, feeding it data, and teaching it to perform a certain task through this data.
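The de-correlation in a random forest comes mainly from restricting each split to a random subset of the features, on top of the bootstrap sampling used in bagging. The sketch below shows only that feature-sampling step; `candidate_features` and the `mtry` parameter name are illustrative, and the square-root default is a common heuristic for classification rather than a fixed rule.

```python
import math
import random


def candidate_features(n_features, rng, mtry=None):
    """Pick the random subset of feature indices considered at one split."""
    if mtry is None:
        # Assumed default: roughly sqrt(p) features, a common classification heuristic.
        mtry = max(1, int(math.sqrt(n_features)))
    return sorted(rng.sample(range(n_features), mtry))


rng = random.Random(0)
split_features = candidate_features(16, rng)  # 4 of the 16 feature indices
```

Setting `mtry` equal to the number of features considers every feature at every split, which reduces the procedure to plain bagged trees.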

Unlike a statistical ensemble in statistical mechanics, which is usually infinite, a machine learning ensemble consists of only a concrete finite set of alternative models but. One set, the bootstrap sample, is the data chosen to be "in-the-bag" by sampling with replacement. Its ability to solve both regression and classification problems, along with robustness to correlated features and a variable importance plot, gives us enough of a head start to solve various problems.

The SVM algorithm's purpose is to find the optimum line or decision boundary for categorizing n. The Support Vector Machine, or SVM, is a common Supervised Learning technique that may be used to solve both classification and regression problems. However, it is mostly used in Machine Learning for classification problems. Interest 6 for a new instance with feature predictors denoted by.

This procedure can be used both when optimizing the hyperparameters of a model on a dataset and when comparing and selecting a model for the dataset. This is a guide to Machine Learning Feature Selection. The out-of-bag set is all data not chosen in the sampling process.
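The in-the-bag and out-of-bag sets can be sketched in a few lines. `bootstrap_split` is a hypothetical helper name, and the sample size of 1000 is an arbitrary choice for illustration.

```python
import random


def bootstrap_split(n_samples, seed=0):
    """Return (in_bag, out_of_bag) index lists for one bootstrap draw."""
    rng = random.Random(seed)
    # In-the-bag: n_samples draws with replacement, so indices can repeat.
    in_bag = [rng.randrange(n_samples) for _ in range(n_samples)]
    chosen = set(in_bag)
    # Out-of-bag: every index that was never drawn.
    out_of_bag = [i for i in range(n_samples) if i not in chosen]
    return in_bag, out_of_bag


in_bag, out_of_bag = bootstrap_split(1000)
oob_fraction = len(out_of_bag) / 1000
```

The probability that a given sample is never drawn is (1 - 1/n)^n, which approaches 1/e (about 0.368) for large n, so roughly a third of the data ends up out-of-bag and can serve as a held-out set for that bootstrap's model.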

Engineers can use ML models to replace complex, explicitly coded decision-making processes by providing equivalent or similar procedures learned in an automated manner from data. ML offers smart. Machine, Random Survival Forests, Bagging Survival Trees, Active Learning, Transfer Learning, Multi-Task Learning, Early Prediction, Data Transformation, Complex Events, Calibration. Feature selection plays a bigger role in machine learning problems by reducing their complexity.

When the same cross-validation.

Reporting Of Prognostic Clinical Prediction Models Based On Machine Learning Methods In Oncology Needs To Be Improved Journal Of Clinical Epidemiology

Bayesian Analysis For Logistic Regression Model Machinelearning Datascience Ai Bayesian Http Www Mathworks Com H Logistic Regression Regression Analysis

2 Bagging Machine Learning For Biostatistics

Schematic Of The Machine Learning Algorithm Used In This Study A A Download Scientific Diagram

An Introduction To Bagging In Machine Learning Statology

Bagging Vs Boosting In Machine Learning Geeksforgeeks

What Is Bagging In Machine Learning And How To Perform Bagging

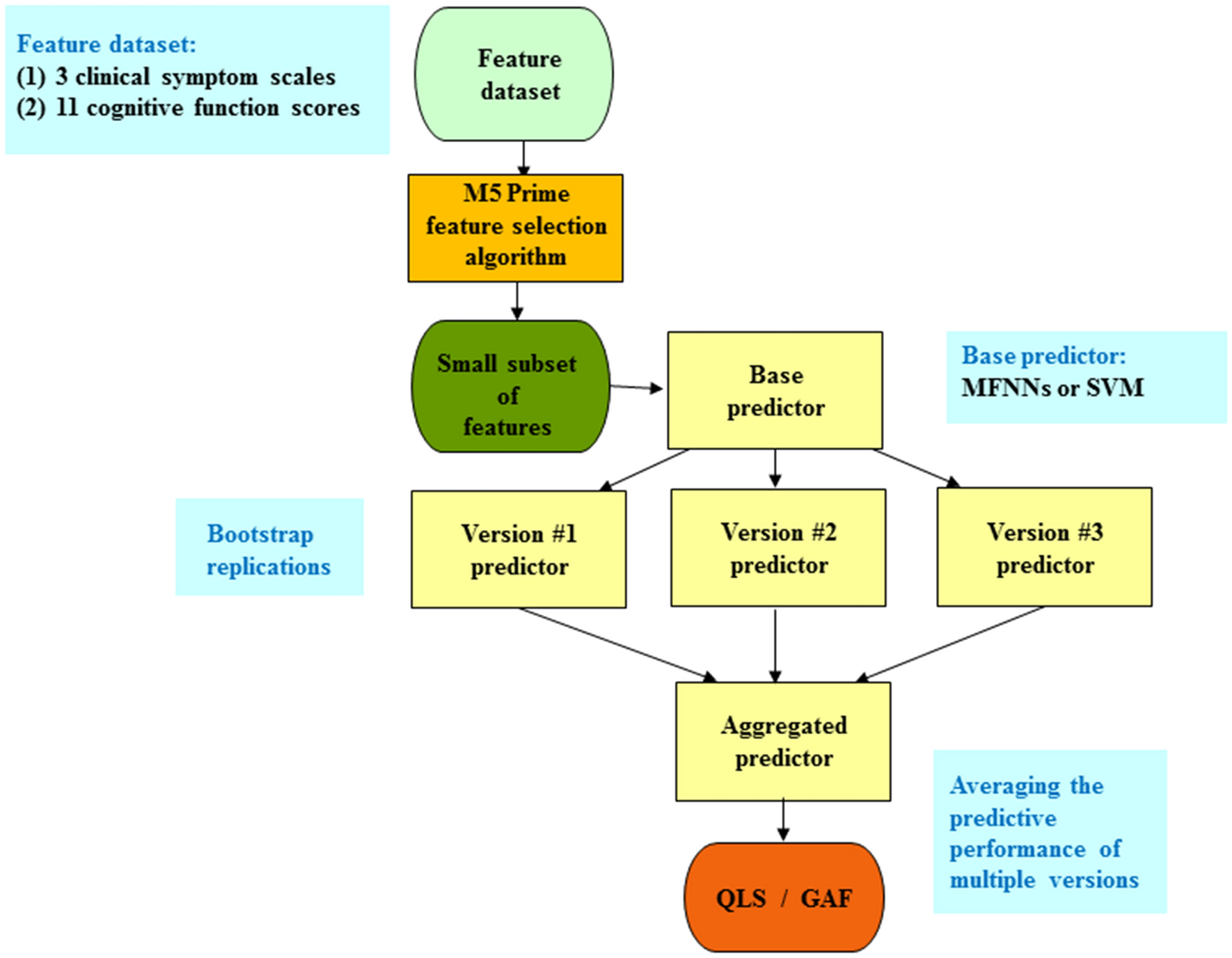

Applying A Bagging Ensemble Machine Learning Approach To Predict Functional Outcome Of Schizophrenia With Clinical Symptoms And Cognitive Functions Scientific Reports

Ensemble Learning Bagging And Boosting In Machine Learning Pianalytix Machine Learning

Bagging Bootstrap Aggregation Overview How It Works Advantages

How To Create A Bagging Ensemble Of Deep Learning Models By Nutan Medium

Bagging Machine Learning Through Visuals 1 What Is Bagging Ensemble Learning By Amey Naik Machine Learning Through Visuals Medium

Ensemble Methods In Machine Learning Bagging Versus Boosting Pluralsight

Application Of Machine Learning Program In Training And Test Prediction Download Scientific Diagram

Ensemble Machine Learning Explained In Simple Terms

Ml Bagging Classifier Geeksforgeeks

Bagging And Pasting In Machine Learning Data Science Python

Bagging Classifier Instead Of Running Various Models On A By Pedro Meira Time To Work Medium